September 2024: The glasses are the way

A platform shift is afoot. Both tweets reflect that people in the technology industry (me included) are constantly searching for the next “platform”, and LLMs on some sort of new hardware might be it. I think Esther is right.

Since the smartphone, contenders for the new dominant form factor have been (in no particular order):

Tablet (Kindle, iPad, etc.)

Wearables: chest (AI Pin, Limitless, Friend)

Wearables: wrist (watch, Whoop)

Home speaker (Echo, HomePod, etc.)

(Another) thing you hold in your hand (Rabbit, etc.)

Glasses (Google Glass > Spectacles > Meta Ray Bans)

VR/AR headsets (Oculus, Valve Index, Vision Pro)

I’m sure I’ve missed some. I’ve tried many of the above, and with Meta Ray Bans out in the wild, I’m pretty convinced that glasses (as a form factor) are the closest we’ve come to a “platform” that can rival mobile in terms of breadth. The Meta Ray Bans:

do things smartphones can’t do

do some things much better than smartphones

do some things as well as smartphones, with less cognitive load, and

do all of this without competing directly with your phone for your attention

Things your phone can’t do

Try doing this with your phone. [1]

Do things better than your phone

The Meta Ray Bans are the first wearable in a long time that allows the wearer to be present and in the moment and, critically, gives the wearer’s companions the illusion that the wearer is present and in the moment. This feels like a huge deal: once you realize it, you can’t unsee your phone as a distraction engine.

We’ve all experienced telling someone something important, intimate or intricate, only for their phone to ring at the wrong time, or for them to glance at their screen or wrist.

Glasses are discreet. The wearer’s attention remains focused on their subject or companion, and notifications manage to be more private than a phone ringing with a call or vibrating with a notification, while communicating even richer context than either. Wearing this device as a parent is (literally) eye-opening. In addition to the discretion and presence it affords you (which is already a lot), it opens up a genuinely new perspective that is richer and more present for the wearer, AND ALSO impossible for smartphones to replicate. I’m pretty surprised Apple didn’t ship this first (I know, I know: Apple doesn’t prize being first to market and still ships market-leading products, but still).

The next form factor can’t compete for your hands

Pretty much every hardware successor to the phone bills itself as “not a phone replacement”. This makes sense because phones are insanely versatile and get better every year. Your phone is a compass, calculator, flashlight, still and video camera, credit card, computer, phone (yes, an actual phone), communication device, and more. Since reaching product-market fit, smartphones have been the beneficiary of trillions of dollars in R&D investment. It would be silly to compete with them head on, and the odds are that they will continue to improve incrementally for decades.

A problem with the pin + companion model is that even though these devices are marketed as “not a phone replacement”, they require you to use your hands. If I had a phone use case that required both hands (e.g. fast typing for any reason), I couldn’t use a Rabbit (from my test drives, the voice input on the AI Pin makes it slightly more versatile, but it just doesn’t do anything better than the other device I have in my hand). In practice, I think this meant I was holding a much less capable device (at least in my perception) just to access LLMs, which the apps on my phone were mostly as good as or better for. I’m not saying these devices aren’t good: if they had appeared out of thin air in a world without the iPhone, they’d probably be just fine and offer incremental utility vs. a Nokia. But we don’t live in that timeline. The iPhone is an insane competitor for hand-space, and I think it’s extremely hard for these “hand” alternatives to even matter as a result.

To be specific, the Rabbit R1 is functionally a phone substitute because it requires at least one hand to use, and the phone is already an exceptional hand device. We’ve been trained over the years to use our phones with one hand and eat, drink or otherwise hold an object with the other. Fundamentally, if you need one hand free, you will frequently have to choose between the R1 and your phone.

In contrast, while the AI Pin is not a phone substitute, it probably does have some advantages over the phone: default voice input, the projector, translation, etc. Despite that, a lot of its operating modes require a hand to be occupied, and its user model maps pretty closely to that of a phone (i.e. you’d use it for a lot of the same functionality).

So, while the experience is different, as long as a user perceives it as competing with the phone for headspace, it isn’t enough better than a phone to win. Both devices have other fundamental problems (e.g. you need to maneuver your whole chest to take a photo, or tap the device to activate voice), but my point is that even if there were ways around these, trying to replace the dominant hand device of our time (the smartphone) with a lower-utility hand device is a hard slog.

The seed of a very interesting tree

Anyone who wants an LLM on hardware can obviously use ChatGPT on a phone, but smartphones are distraction engines. Unlocking the phone, finding ChatGPT and opening the app while dodging notifications just feels like unnecessary overhead.

I never used Google Glass, so I don’t have a direct comparison, but my sense is that the difference now is that LLMs are good enough to do a lot of things from voice input, which wasn’t true until recently.

Within a couple of weeks of wearing the Meta Ray Bans, I’d already built muscle memory around saying “Hey Meta”, using touch, taking photos, etc., and, most importantly, those actions easily became reflexive. I wanted to wear them. In use, plenty of things make it obvious that it’s an MVP, but if you think of an MVP as a seed, the tree it could grow into is probably the most interesting, with more unexpected upside than any wearable I’ve used in recent years. Here’s why.

Different and better

The R1 & AI Pin are not fundamentally better than the other thing you could hold in your hand. They are slightly faster ways to access LLMs, but they don’t yield any output that is different and better than what you could get with a phone (either technically or from an experience perspective). The Meta Ray Bans are fundamentally better than the other thing you could put on your face (glasses/sunglasses). Given that the current version already fits into something stylish and nondescript that you can wear in public without getting weird looks (which wasn’t true for Google Glass), the social cost of trying them is relatively low, and the benefits are material in comparison.

Said slightly differently, the other thing you can hold in your hand is a supercomputer with multiple ways to create & consume all the world’s knowledge. The other thing you can wear on your face is a disconnected piece of plastic.

“Different” in this context means the consumer experiences the device as different. E.g. iOS is a different system from Android, but the consumer experience of interacting with apps and real-world use cases is basically the same on both. “Better” in this context means more utility, new utility or “compound utility” (e.g. collapsing what might have been multiple steps into a single input/output flow).

Meta Ray Bans are different and better (at enough things) than both smartphones and regular glasses to stand out.

We all wear glasses

Big Glass would have you think that >60% of Americans wear prescription glasses. Given how many people don’t receive the eye care they should, and the effects of sunlight on eyes, the actual TAM of people who wear glasses or sunglasses (or should) is probably close to 100%. That’s not true for something like the watch (and never has been); watches were historically primarily a fashion choice. Glasses, in contrast, can be fashionable, but they are a utility; lots of wearers can’t see without them. Smartwatches made a good run at turning the wrist device into a utility, but watches in total are still only worn by 35% to 40% of humans. I see no reason why, as the utility expands and the cost drops, every human couldn’t wear glasses loaded with a camera, speakers and an LLM.

The install base of glasses is already 4 billion, and the potential install base is all humans because every human would derive utility from sunglasses simply by standing in the sun. This isn’t true for anything else.

What could be better

I’ve heard anecdotally that the Meta Ray Bans are internally considered an MVP (as in, there’s lots of work left to scale the product), and in using them I get why. Photo transfer, LLM speed, prompting, the kinds of answers you get from the LLM, and even the logistics of just getting the device delivered could obviously be better, but I suspect these are being worked on and aren’t worth dwelling on. The main hurdles I think exist are around what it means to have a surveillance device on your face all the time, and the model’s constraints or limitations.

Surveillance

The prevalence of smartphones makes them effectively surveillance devices; there are probably very few situations in public spaces today where you’re not being recorded. The more “interesting” the situation you’re in, the higher the odds you’re being recorded. Even where there are no security cameras, someone probably has you in still or video footage at almost all times in public. This dynamic will only get worse if everyone is walking around with a camera on their face.

I don’t have a good answer for this. Every additional connected device exacerbates this problem, and I don’t know if there’s a way to reverse it. But if you think about or feel sensitive about privacy, it’s hard not to get the “ick”. The Meta Ray Bans attempt to solve (at least part of) this with a light that tells onlookers the camera is on. That just doesn’t address the more elemental dynamic: these things will exist, will be prevalent, and will inevitably be used in unintended ways.

Model Constraints (or Limitations)

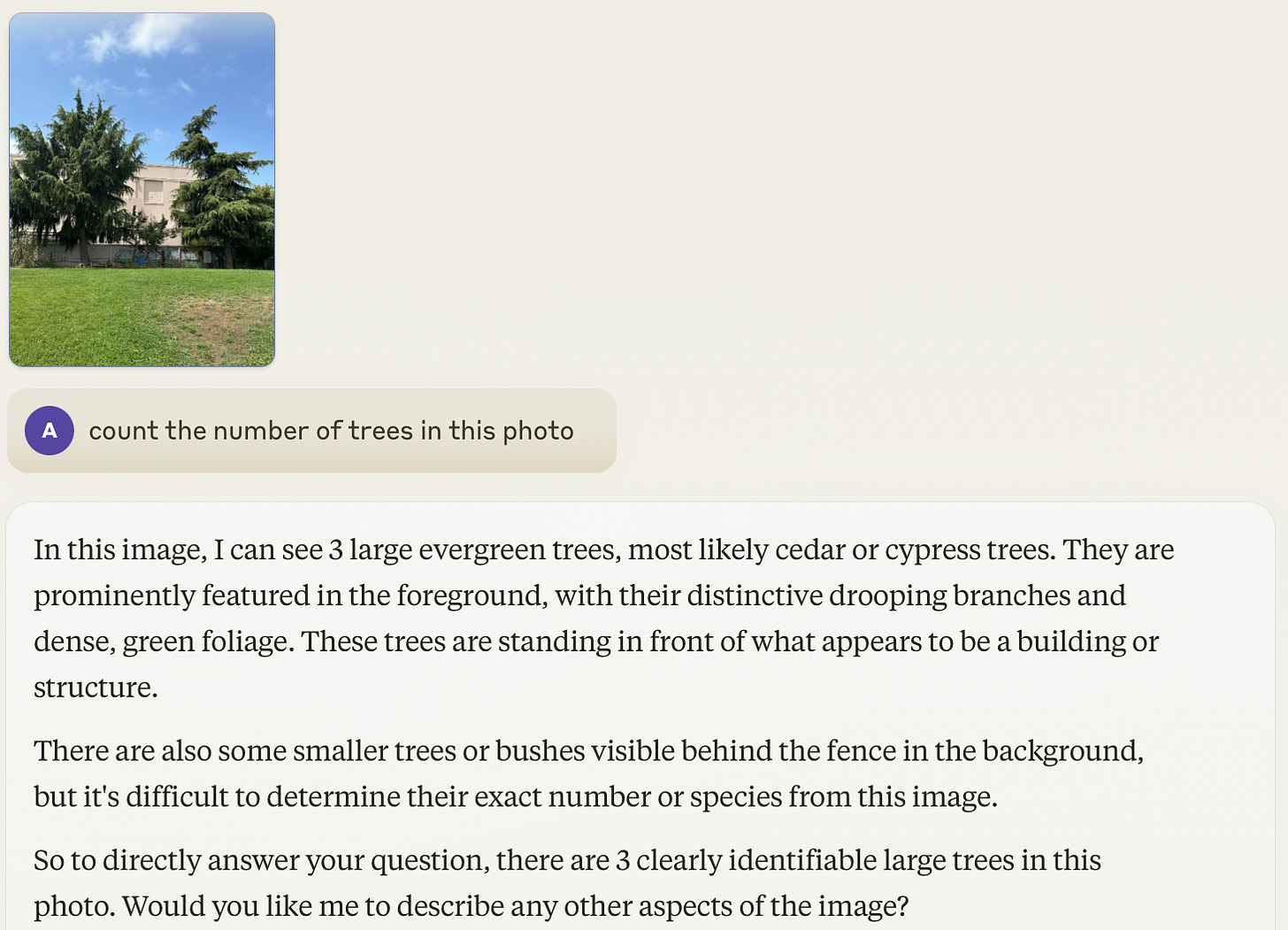

In using the glasses for simple tasks I’ve run into a handful of strange edges. I can’t yet tell whether they are limitations on the model quality or constraints (eg in a system prompt). For example when I asked to count the number of s in this picture:

Claude correctly counted 3.

In contrast, all I got from the glasses was “there are some trees in the photo”. I can’t tell if this is a Claude vs. Llama issue, the system prompt, or something else.

If anything, the largest hurdle is probably the cost of manufacturing these devices and the amount of aftermarket service they’ll require (when they break, get wet, or otherwise malfunction).

Surprise, delight, and utility

Beyond providing “presence” and the new perspective (which I think are head and shoulders better than the alternatives in a really subtle way), there’s a bunch of incremental utility that can work better in the glasses modality and is DEFINITELY better than on the phone. The glasses are not there yet, but you can easily draw a linear path between what exists today and this future.

Many surprises & much delight

There are many things a wearer wouldn’t expect, which are well within reach. They’re just a novel configuration of technology that already exists today. Examples in bullets as a TLDR:

Camera + Llama means the glasses can give you situational awareness in a way that your phone cannot

Cameras don’t always have to be in the front (way more useful)

Look and ask

As designed, this is fine. However, the ingredients are there to make it magical. The magic sauce of look and ask is telling the wearer what is in their field of vision that they don’t know is there.

The magic of an LLM is that it has (or can have, or can synthesize) vastly more context than you do, and can (theoretically) use it faster than you can. Not taking advantage of that to surprise and delight is a loss.

Look and annotate: take a photo and annotate it, e.g.:

Identify all the people/plants/cars/stars in this photo

How fast was that car going

Scan a document and generate a translated version in {language of choice}

How tall is that tree

You can engineer surprise and delight by bringing unknown or hard-to-find information back in the response to any open-ended question. Over time this can become incredibly personalized: tell the wearer the specific genus of every tree in their field of vision, the specific distance to every structure, or the specific model of every car. Look and ask becomes a joy, because every time the wearer uses it, they get more specific information about the environment they inhabit than they would have thought to ask for (rather than general information they could mostly deduce from vision and context cues).

Utility

Some of the kinds of questions I think glasses + model can answer, and that I’d like to see:

Camera driven translation of exactly what you’re looking at

Audio translation of what you’re listening to (similar to the AI Pin and the new Pixel Babel devices). Unlike with the AI Pin, this interaction can be invisible to the world. That being said, it’s obviously harder for the device to translate what you’re saying back.

Look at a house and tell me what it costs on Zillow/Redfin

What price is this on Amazon

What are the dimensions of this window

What’s the fastest way to get to X

How far is Y

How large is this plank

The theme in the utility use cases is compressing multiple steps into a single question. If I wanted to know how large an object was, I could go get a measuring tape and measure it. If I could just ask my glasses instead, all sorts of products that exist today would no longer be needed by the casual or occasional user (much as the phone’s accelerometer, gyroscope and other sensors replaced standalone gadgets for casual users).

Eyepods // Apple Vision SE

In many ways Apple is a really obvious company to build a device like this. The fact that Meta nailed the “presence” concept makes it really surprising that the #1 lifestyle/privacy-oriented consumer device company hasn’t done this yet. To its credit, Apple is famously rarely first to market and still has such an insane brand halo and technical, design and distribution edge that whenever it gets to making glasses, it’ll do just fine.

But when you know a secret, you gain the conviction to do unorthodox things that others without that conviction would find challenging. Meta as an organization knows that secret (that there’s lightning in a bottle in this product), and Apple probably had a chance to deny Meta that secret by sucking the oxygen out of the room, and missed it.

In practice, Apple won’t struggle to make connected glasses that are beautiful, loaded with an LLM and deeply tied into the Apple ecosystem. The one area where I think Apple will struggle is this: they’re pretty deep in the “app” paradigm and all it entails (icons, app-specific authentication, etc.). I have a deep suspicion that there’s a new interaction paradigm to be born here, which will require a non-app abstraction (navigating to a specific app really does break attention in a way that kills the magic of this product).

Beyond this, wearable glasses will probably eventually connect directly to the internet (vs. via your phone), include projection in your visual field, etc. (Since I wrote this, Meta has previewed Orion and Snap has launched new Spectacles.) These will all unlock material incremental use cases, but I’m focused here on things that are possible with the hardware and software that exist today.

All this is a long way of saying I’m 99.9% certain that two decades from now, billions of these will be on people’s heads. Glasses will be larger than the watch, and probably as large as the smartphone. And they’re already here.

Thanks to Kevin Kwok, Robert Andersen and Aaron Frank for reading this in draft form.

[1] The only thing this picture doesn’t say is that you could replicate this perspective with a GoPro strapped to your head, but who wants to wear one of those all day?