January 2026: Synthesis is the new bottleneck

We’re in a time when you can produce far faster than you can understand.

My hypothesis is that three things are true that are not yet widely understood:

1. There’s a type of task that used to be extremely expensive to do and has become dramatically cheaper. Far more people can do it than could even six months ago, most of them aren’t aware they have the ability, and as a result the value of this task is deeply mispriced.

2. Because of #1, our capacity to produce is outstripping our ability to understand.

3. IDEs + coding agents accelerate #1 and #2.

While writing this I ran into this tweet:

This essay expands on what I think is happening from a non-engineer’s perspective, and on some of its implications.

Task Arbitrage

I, like almost everyone I know, have unexpectedly experienced a handful of productivity breakthroughs over the last few months.

Most recently, I’ve adopted some new habits that prompted this realization:

Every night before bed, I fire off a few tasks in Claude Code, Conductor, or Cursor.

Every morning, I check on the tasks from the night before, set up some new ones, and go about my day.

I’m not an engineer, yet this set of habits has dramatically expanded my productivity, mostly in ways I didn’t expect. The experience has also yielded a few surprises.

The first surprise is that there is a growing number of technical tasks I didn’t previously think of as coding but that are very amenable to code-based approaches. A lot of the time these are tasks I would have done in Excel or Google Sheets, often by sampling. Now I ask a coding agent to do them in natural language, review the outputs, and iterate. At a minimum, this lets my sample size be much larger. More often, I get to the real work faster because I can reach a working version of the actual task much more quickly than before.

A second, related surprise is that I’ve seen the greatest leaps in productivity when using coding agents in an IDE rather than through a prompt interface.

The third surprise is more of a realization. It doesn’t feel like AI will kill SaaS for a lot of use cases, because shipping a SaaS product (or really any product) is not just functionality; it’s SLAs, support, maintenance, precision, polish, etc. Instead, AI (and coding agents in particular) enables software to exist that otherwise wouldn’t be built. That software may never be sold or turned into a company or a tool, or it may be too personalized to the way a particular individual or company works. Far below the threshold for a company to exist, there are a ton of tasks that software can now do, and it’s now cheap enough for a non-engineer to have a coding agent build something to do the task and then throw it away (yes, I had coding agents build me a bunch of stuff I used once and never looked at again). Even in my own case, for a lot of the tasks I spend time on, it would never make economic sense to build a whole company around them, because they are either too speculative (the payoff is not clear) or too niche (even if the payoff is high, there aren’t enough transactions or repeat activity to sustain a company). In addition, speaking as a former PM, you can now validate a lot of assumptions before ever asking your engineers or designers to get involved.

Some examples:

Built an app that aggregates several different search strategies for finding the right healthcare payer entity, given only a payer name. This is inherently probabilistic, and we only know whether a strategy works if the payer actually responds successfully. As we validate these strategies, we incorporate them into our core payer search.

Automated several QA tasks that I’d otherwise have done as a sampling process (literally checking individual examples by hand). My default now is to write a script that checks the dataset on a small sample, then scale up once I have outputs I like. Setting this up takes about as long as checking a sample manually would have, except now I can run it over the entire dataset instead of eyeballing a few rows.

Built a classifier to help analyze long-tail errors in payer EDI messages.

Built an explorer to aggregate and analyze large volumes of payer PDFs.

Built an app to aggregate my to-dos from different apps into Apple Notes.
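The sample-then-scale QA habit from the examples above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual script behind those examples: the `check_row` rule and the `payer_name` and `amount` fields are hypothetical stand-ins for whatever a real check would inspect.

```python
import random
from typing import Callable, Optional

# Hypothetical record shape: each row is a dict parsed from the dataset.
Row = dict


def check_row(row: Row) -> list[str]:
    """Hypothetical QA rule: return the list of problems found in one row."""
    problems = []
    if not row.get("payer_name"):
        problems.append("missing payer_name")
    amount = row.get("amount")
    if amount is None or amount < 0:
        problems.append("invalid amount")
    return problems


def run_checks(rows: list[Row], check: Callable[[Row], list[str]],
               sample_size: Optional[int] = None, seed: int = 0) -> dict[int, list[str]]:
    """Run `check` over a random sample of rows, or over every row if sample_size is None.

    Returns a mapping from row index to the problems found in that row;
    rows with no problems are left out.
    """
    indices = range(len(rows))
    if sample_size is not None and sample_size < len(rows):
        # Seeded so the same sample comes back while you iterate on the check.
        indices = random.Random(seed).sample(indices, sample_size)
    failures = {}
    for i in indices:
        problems = check(rows[i])
        if problems:
            failures[i] = problems
    return failures


# Hypothetical example data.
rows = [
    {"payer_name": "Acme Health", "amount": 120.0},
    {"payer_name": "", "amount": 80.0},
    {"payer_name": "Beta Insurance", "amount": -5.0},
]

# Step 1: eyeball the results on a small sample until they look right.
print(run_checks(rows, check_row, sample_size=2))
# Step 2: run the exact same check over the whole dataset.
print(run_checks(rows, check_row))
```

The only thing that changes between the two runs is `sample_size`. The check you validated on a handful of rows is exactly the one that then runs over everything, which is what makes scaling up nearly free.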

These tasks differ a lot from one another in substance, in the context you need to do them well, and so on. I find the collapsing of context and the easy production of a V0 super compelling, and there are simply a lot of tasks that I just wouldn’t have gotten done before. I’m also just reaching the stage where I’m comfortable letting some of these tasks run continuously without much intervention. Overall, it’s clear that there is a type of task that used to be very expensive to complete and is now dramatically cheaper. In many of these cases, I simply wouldn’t have done the task at all. This arbitrage is not priced in yet.

Production Capacity > Synthesis

The other thing that’s clear, and that I’m not sure what to do with, is that my actual production capacity is much less constrained for a certain type of task, and that type expands every day.

The hard part is what comes next. Once you’ve completed all these fairly diverse tasks across different parts of your workflow or business, actually synthesizing them (i.e., consolidating them to make leaps or to understand the second-order effects) is no longer straightforward.

By synthesis here, I mean:[2]

You complete a task, have outcomes, and those outcomes have an effect on the outside world

You observe those effects, and they either converge with your understanding of how the world should work, or diverge

You incorporate that convergence/divergence into your understanding, and now have a new, improved understanding (or at least realize that your understanding is more broken than you realized)

You form a new hypothesis, which leads to a new set of tasks (or an improved ranking of what you thought you had to do next)

Crucially, synthesis is not just blending all the threads together. It is also recognizing, as you go along, which parts of the puzzle are missing and realizing that you need to go seek them out. New understandings often raise new questions.

This process is deeply nuanced: it requires judgment, intuition, and the ability to relate to context that might not be directly present in a task (think of doing a specific task at your company and realizing that something material about a corporate priority is wrong). It’s just super hard to imagine how you’d compress this the way you can compress a task.[3]

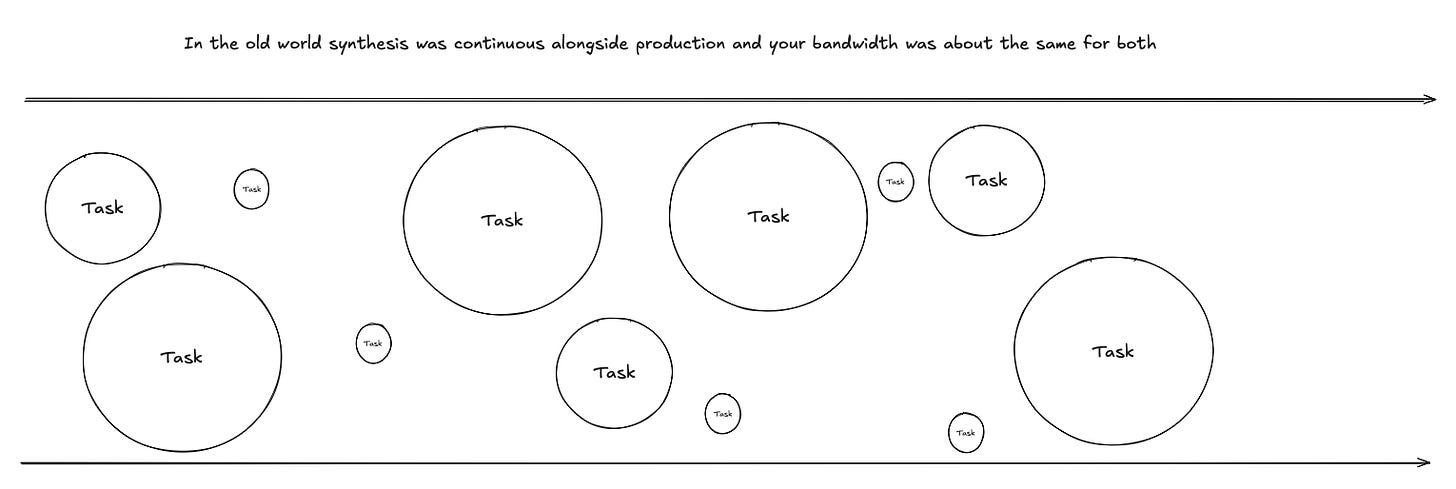

In the old world, you had some grunt work that you had to do. That grunt work took time, and as you spent time in the weeds and details, you were continuously synthesizing what you’d learned, over and over again. As a result, the amount of consolidation and synthesis you had to do at the end to really understand the implications of your work was relatively small compared with the overall time you spent on the task.

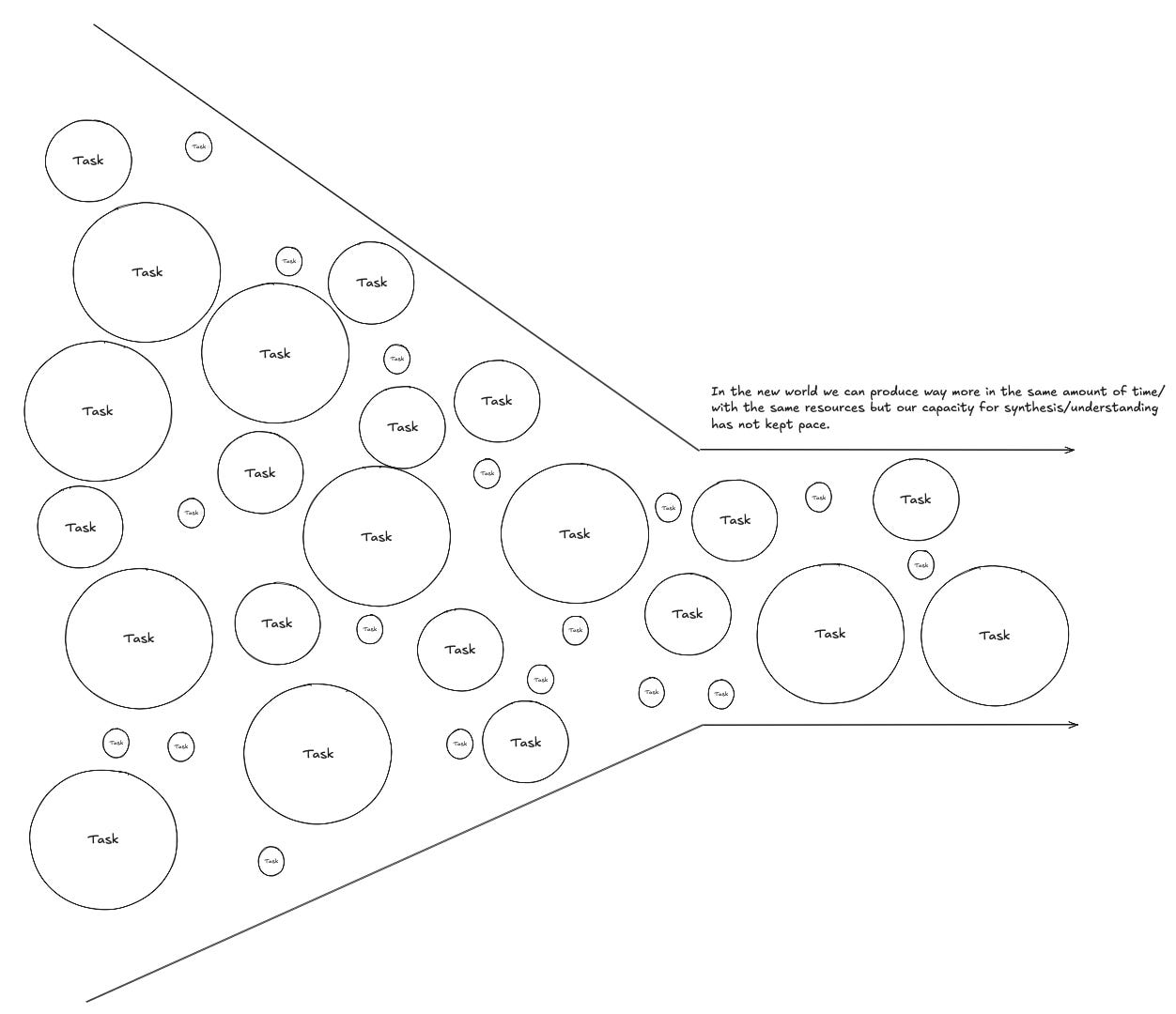

But now certain categories of grunt work are going away (i.e., the time required to do them is shrinking), and with them, the time for and process of continuous consolidation and synthesis has shrunk too. It’s even possible that some detailed nuance and depth, the kind you only notice when you’re in the middle of doing the work, is now missing, though I haven’t hit that realization yet. So what I personally find now is that synthesizing all these threads and bringing them together to realize something novel is hard and scarce.

Imagine you had a bunch of grunt work that was blocking something downstream, and now you’ve automated it all away. Sometimes it’s enough to sit down for 30 minutes and really think things through. Sometimes that process takes days and several discussions, because something new is now possible to build and it isn’t obvious what. But you still need to ship the original thing the grunt work was the foundation for, so you go straight to shipping and never spend the time to understand the novel insight. You get a tactical win (you shipped far faster than you could before), but you have less time to understand what it means for your business, which matters and drives long-term compounding.

Synthesis is the bottleneck simply because, in the past, your capacities to produce, to synthesize, and to understand might not have been matched, but they were in the same ZIP code.[1] Now your capacity to produce has dramatically outstripped your capacity to synthesize, and as a consequence, we might be moving to a world where you have more results than understanding.

Voice, IDEs & Coding Agents

One other surprise[4] has been that voice has been pretty transformational. I can’t say exactly why, or what the psychology behind it is. It is often easier to describe something out loud in natural language than to type it out as a first pass. After that, I often use a planning agent to ask me a bunch of clarifying questions, whose answers I then use to refine the plan. This combination helps solve the blank-canvas problem: you can start iterating on your output from a disorganized thought, which takes much less effort than it used to.

The tradeoff is that you don’t always reach the level of precision you would if you really sat down and thought through the task you’re working on. But you can get to 80% precision, which is surprisingly effective and often yields results that help refine your path.

I mentioned earlier that I’ve been using IDEs & coding agents quite a lot. I think one of the things the market doesn’t yet appreciate is that in the old world, tools like VS Code really were tools for coders. In the current world, IDEs like Cursor and Conductor have become tools for anyone who does structured work. They make it easy to flexibly generate and manipulate structured data, relate it to unstructured data, aggregate new data sources quickly, and process large amounts of unstructured data. If you’ve ever worked with expert Excel users like investment bankers, it’s clear that spreadsheet use has a lot of conceptual overlap with coding. Interacting with tabular data through a coding agent + IDE feels like getting insane throughput out of a spreadsheet without needing to be an S-tier Excel jockey. I wouldn’t be surprised if the thing that replaces Excel (or even productivity suites like Microsoft Office or Google Workspace) is not another suite but a coding agent + IDE, as this dynamic becomes more pronounced: agents get more powerful, and more people realize what’s possible.

[1] I’m pretty convinced my experience generalizes, because I don’t think any of the things I’ve learned are special. I just happened to try them, and they worked better, faster, and more effectively than I expected. So my assumption is that many other people are experiencing this or will soon, and as a consequence many people are currently executing far below their capacity without knowing it (and this gap grows every day).

[2] It’s possible that my capacity for synthesis will also expand over time. It just hasn’t happened yet, and I’m not sure how to read, think, etc. more rapidly.

[3] Also, if you happen to have thought carefully about how to get better at synthesis, I’d love to chat or read whatever you’ve got.

[4] Two other surprises/observations. First, the tool or interface you use really matters. I find I’m much better able to prototype and execute browser-scripting workflows in Cursor than in something like Conductor, but for anything that doesn’t require a browser, it’s far easier to interact with Claude Code directly in Conductor. That makes me suspect that being willing to retry in a new tool or IDE is going to be important. Second, the Cursor browser tool points to something I suspect will happen this year: there is a new prosumer/professional browser to be built with native Claude Code integration, and whoever wins it will win a new, emerging user who browses for semi-professional reasons but can handle a real amount of volume because they can now build automations that weren’t possible before.

—

Unrelated: I’m hiring at Substrate. If you’re load-bearing and interested in working on thorny, deep-in-the-stack RCM problems, learn more here.

'In the old world you had some grunt work that you had to do. That grunt work took time, and as you spent time in the weeds and details, you were kind of continuously synthesizing what you’d learned over and over again.'

I found this to be true for me recently when reviewing a huge customer-complaint spreadsheet. Reading through the feedback myself helped things click into place in real time. While the themes and prompted summaries were technically accurate, I wasn’t synthesizing the feedback the same way. Maybe it would be different if I were already more familiar with the feedback and the product, instead of trying to synthesize first from an LLM summary.

Thanks for sharing. The framing is very helpful and I've had similar thoughts working as an engineer.

As we move up a level of abstraction (from Python to technical English), it reminds me of the Feynman quote: "What I cannot create, I do not understand." As you work, synthesis comes on the path to creation. Now you're creating at high throughput, but you have to invest more retroactive effort to understand whether and how your creations are useful.

A related idea I've been having is how personality and aptitudes shape people's choice of role. Many Python programmers today would probably have written technical English as business analysts in the past. We're in an interesting moment where roles are being redefined, and the same genetic profiles are being re-sorted.

If AIs are creating so rapidly, what level of abstraction do humans need to operate at to be productive? My sense is we're all destined for QA 😅